Two overarching themes are likely to dominate the investing landscape over 2026 and beyond: 1) the unstoppable rise of artificial intelligence (AI) and 2) the changing global order. These are such complex and dynamic subjects that it is impossible (even unwise) to form a fully-fledged thesis. More important than knowing the terrain is having the right attitude to traverse it.

Here is my ironic take on AI: I am incredibly bullish on what it means for productivity and investment opportunities. But I am tired of reading articles and posts that are clearly written (or heavily edited) by AI. So I am writing this commentary without leaning on AI, even if it means there are a few tpyos!

The impact of AI on businesses and the market is accelerating. Claude Cowork is an AI tool that makes it exponentially easier and faster for both developers and non-developers to build software. Cowork was itself written by Claude (Anthropic’s AI model and agent) in only 10 days, compared with a typical development cycle of 3 to 12 months. The ‘Claude Code moment’ went viral on platforms like X, with Bloomberg’s Odd Lots podcast capturing the zeitgeist.

As with the DeepSeek episode in early 2025, social media has caused the collective consciousness to narrow its focus with laser-like intensity. Software names have lagged the market for a while as investors weighed the risk of AI disruption. The ‘Claude Code moment’ has turned that steady bleed into a January rout. The baby has been thrown out with the bathwater, and there will be long-term winners among the software wreckage, but the ground is treacherous: you could be right in 3 to 5 years and still be down 50% in 3 months. Tech investor Gavin Baker has an apropos quote for these situations: “Either you panic early, or you double up late – anything in between will get you killed!”

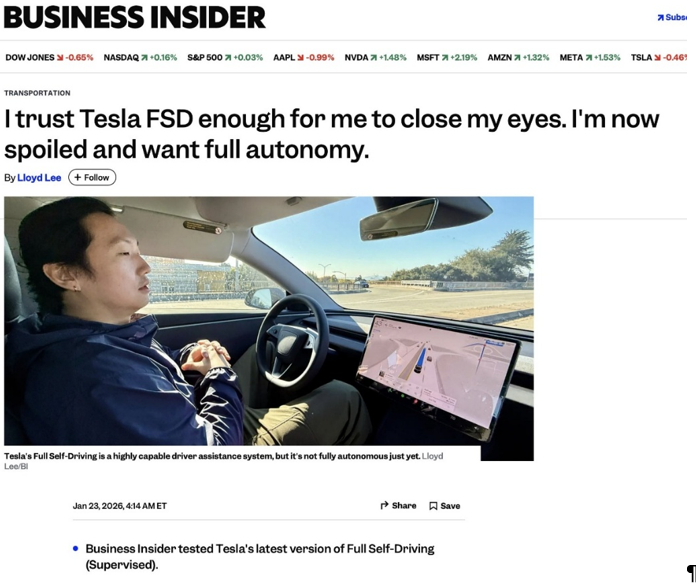

2026 will be the year when we move beyond the AI infrastructure thesis (i.e. semiconductor names like Nvidia and Taiwan Semiconductor Manufacturing Company [TSMC]) and into the AI utilisation thesis. Just a few days ago, two of our portfolio companies provided tangible evidence of what is to come. The founder of AI-powered insurance company Lemonade announced that the company would offer cheaper rates to drivers who use Tesla’s Full Self-Driving (FSD) functionality, on the basis that FSD is dramatically safer than human drivers.

Elon Musk posted about the move on X, which played a part in Lemonade’s share price rising over 23% in just two days. Lemonade is up 26% YTD (to 26 January).

Tesla is getting ever closer to its goal of fully autonomous driving and is taking incremental steps through its Robotaxi project, including unsupervised rides in Austin, Texas. The future happens slowly, and then all at once.

Corporates (particularly those in the US) will find ways to use AI to boost productivity. This may come at the cost of employment, which will be disorienting at the macroeconomic level. CEOs will be loath to mention AI, as it is likely to become a hot-button political issue if job losses mount. I believe, at least for the foreseeable future, that having the right people will be the unlock (or magnifier) for AI. If I have a workforce of 100 people and they use AI to ramp their revenue-generating capacity from US$500k to US$1mn per person, then logically the best way to increase my revenue is to hire more people, since each new hire now brings in twice as much! The same logic applies to GPUs (and compute in general) – if what they are doing is turning energy (watts) into revenue, and energy is the biggest bottleneck, then the goal is to maximise revenue per watt (which is what Nvidia does), not minimise cost per watt. Going back to people: I cannot emphasise enough that the 80:20 principle applies – having the right people with the right attitude is everything. More on this idea later.
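To make the revenue-per-watt point concrete, here is a toy sketch in Python. Every figure in it (the 100 MW power budget and the per-watt revenue and cost of the two options) is hypothetical and chosen purely for illustration, and it assumes power is the only binding constraint. Under that assumption, every available watt goes to whichever option is chosen, so the comparison comes down to what each watt earns, not just what it costs.

```python
# A toy model of the "revenue per watt" argument. All figures are
# hypothetical and chosen only for illustration; they are not real
# chip, power or pricing data.
from dataclasses import dataclass

POWER_BUDGET_WATTS = 100_000_000  # hypothetical 100 MW site; power is the binding constraint


@dataclass
class Option:
    name: str
    revenue_per_watt: float  # hypothetical annual revenue generated per watt (US$)
    cost_per_watt: float     # hypothetical annual all-in cost per watt (US$)

    def annual_profit(self, watts: float) -> float:
        # With power fixed, all watts go to this option, so profit scales with watts.
        return watts * (self.revenue_per_watt - self.cost_per_watt)


options = [
    Option("cheapest per watt", revenue_per_watt=10.0, cost_per_watt=6.0),
    Option("highest revenue per watt", revenue_per_watt=20.0, cost_per_watt=9.0),
]

# Minimising cost per watt and maximising profit under the power cap pick different options.
cheapest = min(options, key=lambda o: o.cost_per_watt)
best = max(options, key=lambda o: o.annual_profit(POWER_BUDGET_WATTS))

for o in options:
    print(f"{o.name}: US${o.annual_profit(POWER_BUDGET_WATTS) / 1e6:,.0f}mn per year")
print(f"Lowest cost per watt: {cheapest.name}")
print(f"Best under the power cap: {best.name}")
```

In this toy case the option with the lowest cost per watt earns US$400mn a year against US$1,100mn for the option with the highest revenue per watt, because the extra revenue per watt more than covers the extra cost. If the revenue gap were small enough the cheaper option could still win, which is why the sketch compares profit per watt rather than either metric in isolation.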

A few thoughts on geopolitics. It is becoming increasingly clear that the US does not accept the consensus view of a multipolar world and is reasserting its position as the global hegemon as part of its “America First” strategy. The following article does a great job of laying out the thinking of Elbridge Colby (the architect of the US’s defence strategy) as well as the US’s strategic priorities: The Bridge at the Centre of the Pentagon. To whet your appetite: Colby’s core claim is that US strategy in the 21st century should aim to prevent China from achieving hegemony over Asia. The rest of his framework follows from that point. If you want to go deeper, you can read the actual US policy documents outlining that strategy: 2026 National Defense Strategy and 2025 National Security Strategy.

Now to tie geopolitics, AI and attitudes together.

My sense is that many people (perhaps a majority) are constantly at a stress level of, say, 6 or 7 out of 10, and are frequently triggered up to 9 out of 10, in part by mainstream and social media. This can be detrimental to your mental, physical and financial health.

The first helpful attitude is dispassion (detachment, objectivity, impartiality). Whatever I personally think about US President Donald Trump and his methods is completely irrelevant to my mandate – all that matters is what they mean for financial assets. If anything, I can take advantage of the fact that other people become so emotionally charged that they end up making poor financial decisions. The US’s strategic playbook is still in the early stages of playing out, meaning there is more upheaval and triggering to come.

The same logic applies to individual shares. I buy Tesla because I think the share price will go up, not because I think Elon Musk is the kind of person I would like my daughter to marry.

The next one is not so much an attitude as an orientation: agency. I am often asked whether I am worried about AI’s impact over the next 10 years. First, worrying about the worst case is a waste of time, as you experience the misery twice. Second, the past, present and future will always belong to high-agency people. This is what I mean by the right people. People with a victim mindset will see AI as an existential threat; people with a high-agency mindset will see AI as an incredible opportunity. Both are right.

Finally, if you are going to be an equity investor, it helps to be an optimist (as I suspect most high-agency people are). Human and market history have proven that people are innovative and will always find a way.

I will end with this passage by CS Lewis. He was talking about the atomic bomb, but you can apply his thinking to AI or any other source of dread:

“If we are all going to be destroyed by an atomic bomb, let that bomb when it comes find us doing sensible and human things—praying, working, teaching, reading, listening to music, bathing the children, playing tennis, chatting to our friends over a pint and a game of darts—not huddled together like frightened sheep and thinking about bombs. They may break our bodies (a microbe can do that), but they need not dominate our minds.”